System for Biometric Authorization of Internet Users

Based on Fusion of Face and Palmprint Features



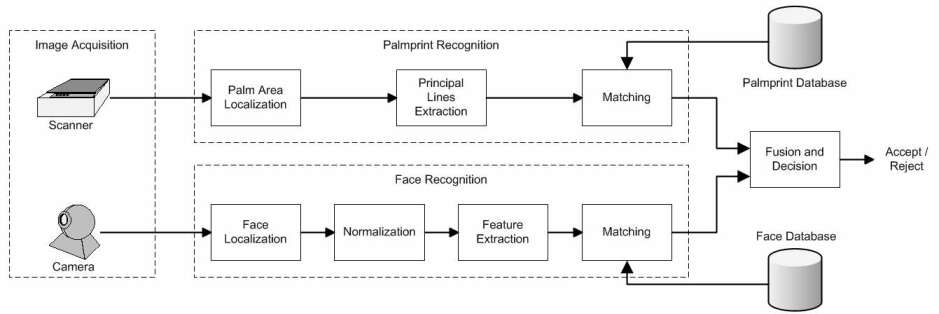

Architecture of the proposed multimodal biometric verification system

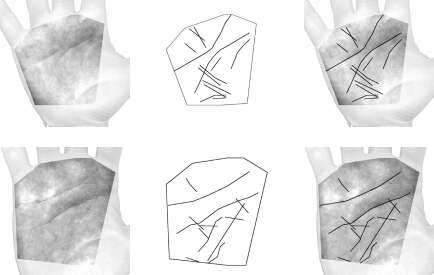

Fig. 1 shows the block diagram of the proposed bimodal biometric verification system. In the image-acquisition phase, the palm and facial images are acquired using a low-cost scanner and a web camera, respectively. Up to the fusion stage, these images are processed separately in the palmprint-recognition and face-recognition subsystems. The matching scores from both recognition modules are combined into a single matching score using fusion at the matching-score level, and the decision to accept or reject the user is based on this score.

Palmprint recognition subsystem

In the first phase of the palmprint-recognition process, the palm area is located on the basis of the hand contour and the stable points on it. The extracted palmprint area is hexagonal (Fig. 2d). In the second phase, the principal lines of the palm are extracted using line-detection masks and a line-tracking algorithm. Finally, a live-template based on the principal palm lines is matched against the templates in the palmprint database using an approach similar to the HYPER method.

Face recognition subsystem

The face-recognition process consists of four phases: face localization based on the Hough method; normalization of both geometry and lighting; feature extraction using eigenfaces (the learning stage uses the Karhunen-Loeve (K-L) transform); and, finally, matching of the live-template against the templates stored in the face database.

Fusion and decision

The final decision to accept or reject a user (that is, whether the person's identity matches the claimed ID or PIN code) is made in the fusion/decision module. The matching scores from both recognition modules are combined into a single matching score using fusion at the matching-score level. This score is compared with a predefined threshold, and the user's authorization is accepted or rejected accordingly.
Program modules for preprocessing the palmprint images, template generation and palmprint recognition

To localize the palm area, the palm images are first preprocessed with Gaussian smoothing and contrast enhancement. Fig. 2a shows an image captured by the scanner, and Fig. 2b shows the result of preprocessing. The hand is segmented using standard global thresholding, after which a contour-following algorithm extracts the hand contour (Fig. 2c). The palm area, approximated by a hexagon, is then determined from the stable points on the contour. Fig. 2d shows the extracted region of interest.
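The preprocessing chain above can be sketched in NumPy as follows. This is a minimal illustration, not the project's code: the source does not specify the smoothing width, the contrast-enhancement method or the global threshold, so the sketch assumes sigma = 1, a linear contrast stretch and Otsu's method (a common choice of global threshold). It also assumes the hand is brighter than the scanner background, and it stops at the binary hand mask; contour following and hexagon extraction are not shown.

```python
import numpy as np


def gaussian_smooth(img: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Separable Gaussian smoothing (kernel truncated at 3 sigma, edge padding)."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img.astype(float), radius, mode="edge")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)  # smooth along each row
    return np.apply_along_axis(conv, 0, rows)    # then along each column


def stretch_contrast(img: np.ndarray) -> np.ndarray:
    """Linear contrast stretch to the full 0..255 range, returned as uint8."""
    lo, hi = float(img.min()), float(img.max())
    if hi == lo:
        return np.zeros(img.shape, dtype=np.uint8)
    scaled = (img - lo) * 255.0 / (hi - lo)
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)


def otsu_threshold(img: np.ndarray) -> int:
    """Otsu's global threshold t for a uint8 image: pixels > t are foreground."""
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    omega = np.cumsum(p)                 # probability of class 0 (values <= t)
    mu = np.cumsum(p * np.arange(256))   # cumulative mean of class 0
    num = (mu[-1] * omega - mu) ** 2
    den = omega * (1.0 - omega)
    between = np.zeros_like(num)
    ok = den > 1e-12
    between[ok] = num[ok] / den[ok]      # between-class variance
    return int(np.argmax(between))


def segment_hand(img: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Smooth, stretch and threshold; returns a boolean hand mask."""
    enhanced = stretch_contrast(gaussian_smooth(img, sigma))
    return enhanced > otsu_threshold(enhanced)


if __name__ == "__main__":
    # Synthetic "scan": a bright hand-like rectangle on a darker background.
    scan = np.full((60, 80), 30.0)
    scan[15:45, 20:60] = 200.0
    mask = segment_hand(scan)
    print(mask.sum())  # roughly the 30 x 40 = 1200 pixels of the rectangle
```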

The extraction of the principal lines begins by convolving the grey-scale palmprint area with four line-detection masks. Fig. 3 shows the results of applying these masks to the palm area.
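The mask-convolution step can be illustrated with the four classic 3 × 3 line-detection masks (horizontal, +45°, vertical, -45°). The source does not give the size or coefficients of the masks used in the project, so these textbook masks are an assumption, as is the choice to invert the image first (palm lines are darker than the surrounding skin, while these masks respond to bright lines). For each pixel, the sketch keeps the strongest mask response and the index of the mask that produced it. It relies on `sliding_window_view`, available in NumPy 1.20 and later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Classic 3x3 line-detection masks (assumed, not taken from the project).
# Each is symmetric under a 180-degree rotation, so correlation == convolution.
MASKS = np.array([
    [[-1, -1, -1], [2, 2, 2], [-1, -1, -1]],   # horizontal
    [[-1, -1, 2], [-1, 2, -1], [2, -1, -1]],   # +45 degrees
    [[-1, 2, -1], [-1, 2, -1], [-1, 2, -1]],   # vertical
    [[2, -1, -1], [-1, 2, -1], [-1, -1, 2]],   # -45 degrees
], dtype=float)


def line_responses(palm: np.ndarray):
    """Return (strength, direction) maps for dark lines in a grey-scale image."""
    inverted = 255.0 - palm.astype(float)          # make dark palm lines bright
    padded = np.pad(inverted, 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))  # shape (H, W, 3, 3)
    responses = np.einsum("ijkl,mkl->mij", windows, MASKS)  # shape (4, H, W)
    direction = np.argmax(responses, axis=0)       # index into MASKS
    strength = np.clip(responses.max(axis=0), 0.0, None)
    return strength, direction


if __name__ == "__main__":
    palm = np.full((30, 40), 200.0)
    palm[10, :] = 50.0  # a dark horizontal "palm line"
    strength, direction = line_responses(palm)
    print(strength[10, 20], direction[10, 20])
```

Taking the maximum over the four orientations gives a single line-strength map that a subsequent line-tracking step could follow, while the direction map records which orientation responded best.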

A line-tracking algorithm then yields a set of lines. Examples of palm-line extraction are shown in Fig. 4.

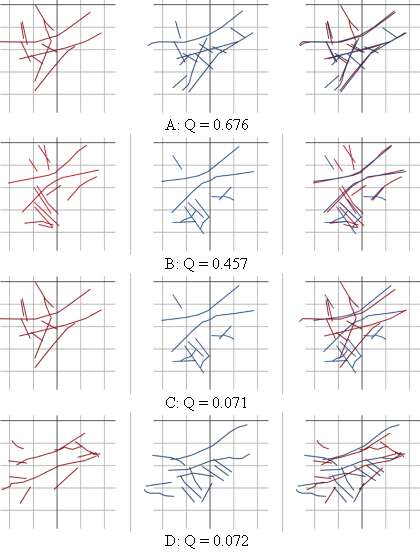

The extracted lines are described in a hand-coordinate system based on the stable points on the hand contour, which makes them invariant to translation and rotation of the hand relative to the sensor. The program modules that match the live-template against a template from the database (hypothesis generation and evaluation) are based on an adaptation of the method of Ayache and Faugeras (1986). Once all the hypotheses have been evaluated, the final palmprint-similarity measure is computed. Fig. 5 shows the similarity measures computed for several pairs of palmprint templates.
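The invariance argument can be made concrete: if line points are expressed relative to an origin and an axis defined by two stable points, any rigid motion of the hand on the scanner cancels out. In this sketch the two stable points `a` and `b` are hypothetical inputs (the source does not say which contour points are used); the origin is placed at `a` and the x-axis points towards `b`.

```python
import numpy as np


def to_hand_coords(points, a, b) -> np.ndarray:
    """Express 2-D image points in a frame anchored at stable point a,
    with the x-axis pointing towards stable point b."""
    points = np.asarray(points, dtype=float)
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    u = (b - a) / np.linalg.norm(b - a)   # unit x-axis
    v = np.array([-u[1], u[0]])           # unit y-axis, perpendicular to u
    return (points - a) @ np.stack([u, v]).T


def rigid_motion(points, angle, shift) -> np.ndarray:
    """Rotate points by `angle` radians about the origin, then translate."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s], [s, c]])
    return np.asarray(points, dtype=float) @ rot.T + np.asarray(shift, dtype=float)


if __name__ == "__main__":
    line = np.array([[120.0, 80.0], [130.0, 95.0], [142.0, 104.0]])  # palm-line points
    a, b = np.array([100.0, 50.0]), np.array([160.0, 60.0])          # stable points
    before = to_hand_coords(line, a, b)
    moved = [rigid_motion(p, 0.3, (25.0, -12.0)) for p in (line, a, b)]
    after = to_hand_coords(*moved)
    print(np.allclose(before, after))  # True
```

Because a rotation preserves dot products and the translation is removed by subtracting `a`, the hand-frame coordinates do not change when the whole hand is moved; scale is not normalized, which matches a scanner with a fixed resolution.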

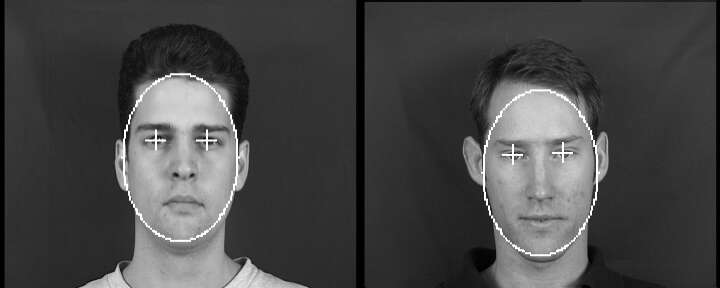

Program modules for preprocessing the face images, template generation and face recognition

Faces are localized in the images using an approach that combines the Hough method with skin-colour information. Once a face candidate has been selected, the system locates the eyes using a simple neural network. Two examples of face localization are shown in Fig. 6.
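The skin-colour cue can be sketched as a per-pixel test in the YCbCr colour space. The source does not state which colour model or thresholds the project used; this sketch assumes the JPEG (ITU-R BT.601 full-range) RGB-to-YCbCr conversion and the widely used chroma ranges of Chai and Ngan (Cb in [77, 127], Cr in [133, 173]). The Hough-based shape search and the neural-network eye locator are not shown.

```python
import numpy as np

# Assumed skin chroma ranges (Chai and Ngan), not taken from the project.
CB_RANGE = (77.0, 127.0)
CR_RANGE = (133.0, 173.0)


def skin_mask(rgb: np.ndarray) -> np.ndarray:
    """Boolean mask of skin-coloured pixels in an (H, W, 3) RGB image."""
    r, g, b = (rgb[..., k].astype(float) for k in range(3))
    # Chroma components of the JPEG / BT.601 full-range YCbCr transform
    # (luma is not needed for this test).
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return ((CB_RANGE[0] <= cb) & (cb <= CB_RANGE[1])
            & (CR_RANGE[0] <= cr) & (cr <= CR_RANGE[1]))


if __name__ == "__main__":
    pixels = np.array([[[224, 172, 140], [0, 0, 255], [0, 255, 0]]], dtype=np.uint8)
    print(skin_mask(pixels))
```

Testing only the chroma channels makes the cue relatively insensitive to overall brightness, which is one reason such a mask is a common first filter before a shape-based search.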

Since the K-L transform operates directly on pixel values, the face images must be normalized before feature extraction. Face normalization consists of geometry normalization, background removal and lighting normalization, and the face images are normalized to a fixed size of 64 × 64 pixels. Fig. 7 shows several images after the normalization phase.
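Geometry and lighting normalization can be sketched as follows, assuming the eye centres found in the localization step are given. The eye target positions in the 64 × 64 output, the nearest-neighbour resampling and the use of histogram equalization for lighting normalization are all assumptions made for illustration; background removal is not shown. The similarity transform (rotation, scale and translation) that maps the output eye positions onto the detected ones is written with complex numbers, which keeps it to two lines.

```python
import numpy as np


def align_face(img, left_eye, right_eye, size=64,
               target_left=(20.0, 24.0), target_right=(44.0, 24.0)):
    """Warp a grey-scale image so that the eyes, given as (x, y), land on
    fixed (hypothetical) positions in a size x size output."""
    zs_l, zs_r = complex(*left_eye), complex(*right_eye)
    zd_l, zd_r = complex(*target_left), complex(*target_right)
    scale_rot = (zs_r - zs_l) / (zd_r - zd_l)   # rotation and scale
    shift = zs_l - scale_rot * zd_l             # translation
    ys, xs = np.mgrid[0:size, 0:size]
    src = scale_rot * (xs + 1j * ys) + shift    # source position of each output pixel
    # Nearest-neighbour sampling, clamped to the image border.
    sx = np.clip(np.rint(src.real).astype(int), 0, img.shape[1] - 1)
    sy = np.clip(np.rint(src.imag).astype(int), 0, img.shape[0] - 1)
    return img[sy, sx]


def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Histogram equalization of a uint8 image (assumed lighting normalization)."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)[0][0]]
    if cdf[-1] == cdf_min:              # constant image: nothing to equalize
        return img.copy()
    lut = np.rint((cdf - cdf_min) * 255.0 / (cdf[-1] - cdf_min))
    return np.clip(lut, 0, 255).astype(np.uint8)[img]


if __name__ == "__main__":
    frame = (np.arange(128 * 128) % 251).reshape(128, 128).astype(np.uint8)
    face = equalize_histogram(align_face(frame, (40, 48), (88, 48)))
    print(face.shape)
```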

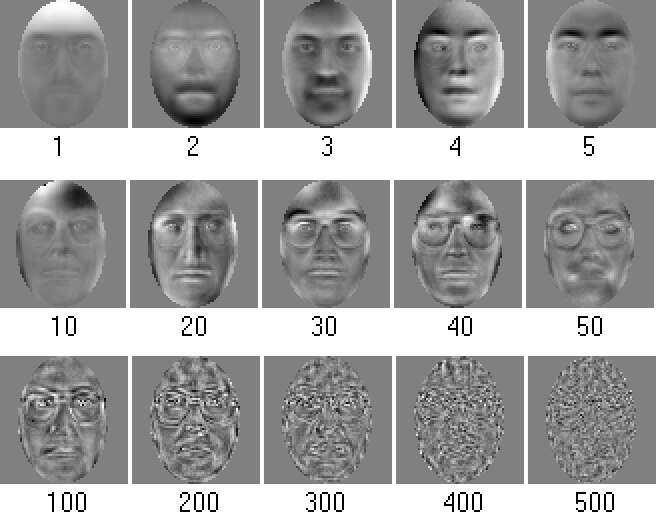

Facial feature extraction uses the eigenface technique, which applies the K-L transform to a set of facial images. The feature subspace (face space) is spanned by the eigenvectors of the image-set covariance matrix that correspond to its m largest eigenvalues (here m = 111). Fig. 8 shows some of the eigenfaces obtained from our database.
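The K-L step can be sketched with NumPy's SVD: the right singular vectors of the mean-centred data matrix are the eigenvectors of the sample covariance matrix, so the 4096 × 4096 covariance matrix of 64 × 64 images never has to be formed. This is an illustration rather than the project's implementation; note that m eigenfaces with nonzero eigenvalues require at least m + 1 training images. The `project` function produces the kind of feature vector used as the face template.

```python
import numpy as np


def train_eigenfaces(images, m):
    """K-L transform of a set of equally sized images.

    Returns the flattened mean image, the m leading eigenfaces (as rows)
    and their eigenvalues, largest first."""
    data = np.asarray([np.ravel(img) for img in images], dtype=float)  # (n, d)
    mean = data.mean(axis=0)
    centred = data - mean
    # Rows of vt are eigenvectors of the covariance matrix, sorted by
    # decreasing singular value.
    _, sing, vt = np.linalg.svd(centred, full_matrices=False)
    eigenvalues = sing ** 2 / (len(data) - 1)   # eigenvalues of the covariance matrix
    return mean, vt[:m], eigenvalues[:m]


def project(img, mean, eigenfaces):
    """Coordinates of an image in face space (one value per eigenface)."""
    return eigenfaces @ (np.ravel(img).astype(float) - mean)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    faces = rng.random((12, 8, 8))             # stand-in for normalized face images
    mean, eig, lam = train_eigenfaces(faces, 5)
    print(project(faces[0], mean, eig).shape)  # one coefficient per eigenface
```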

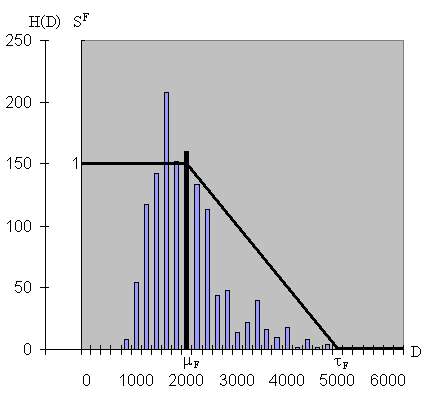

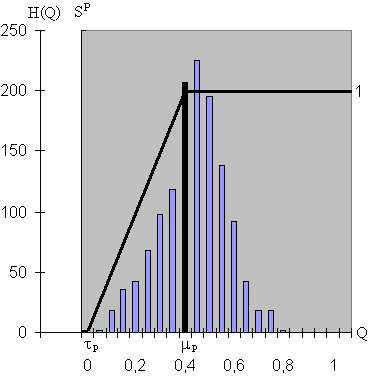

The face template is the 111-component feature vector obtained by projecting the face image onto the eigenface subspace. In the matching phase, the matching score between two face-feature vectors is the Euclidean distance between them.

Fusion and decision

In our bimodal biometric system, fusion is performed at the matching-score level. When verifying the identity of an unknown sample, we obtain two sets of scores from the two independent matching modules (the palmprint-recognition module and the face-recognition module), and these individual scores must be combined into a single matching score. Since the palmprint-matching scores and the face-matching scores lie in different ranges, they have to be normalized before they are combined. The normalization uses two transition functions: Fig. 9 illustrates the functions SP and SF, which are based on the frequency distributions of the palmprint similarities and the facial distances.
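The normalization step, together with the weighted combination and threshold test that this section goes on to define, can be sketched as below. The real transition functions SP and SF are derived from the score distributions in Fig. 9 and their shape is not given in the text, so the clipped linear ramps and all numeric bounds here are hypothetical placeholders, as are the default weight and threshold. The face score is treated as a distance, so a smaller distance maps to a higher similarity, and when several templates are stored per user, the largest normalized similarity of each modality is used.

```python
import numpy as np

# Hypothetical ramp bounds; the real SP and SF come from score distributions.
PALM_BOUNDS = (0.2, 0.9)       # palm similarity: higher is better
FACE_BOUNDS = (500.0, 3000.0)  # face Euclidean distance: lower is better


def ramp(x: float, lo: float, hi: float) -> float:
    """Map x linearly from [lo, hi] onto [0, 1], clipping outside the interval."""
    return float(np.clip((x - lo) / (hi - lo), 0.0, 1.0))


def s_palm(similarity: float) -> float:
    return ramp(similarity, *PALM_BOUNDS)


def s_face(distance: float) -> float:
    return 1.0 - ramp(distance, *FACE_BOUNDS)


def fuse_and_decide(palm_similarities, face_distances, threshold=0.7, w_palm=0.6):
    """Return (TSM, accepted) with TSM = wP*SP + wF*SF and wP + wF = 1."""
    sp = max(s_palm(x) for x in palm_similarities)  # largest normalized palm score
    sf = max(s_face(d) for d in face_distances)     # largest normalized face score
    tsm = w_palm * sp + (1.0 - w_palm) * sf
    return tsm, tsm > threshold


if __name__ == "__main__":
    print(fuse_and_decide([0.55, 0.9], [3000.0, 1750.0]))
```

With these placeholder settings, the example call gives SP = 1 and SF = 0.5, so the TSM is about 0.8 and the claim is accepted at T = 0.7. Clipping both normalized scores to [0, 1] keeps the TSM in [0, 1] for any weights that sum to 1.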

The final matching score, expressed as the total-similarity measure (TSM), is calculated as a linear combination of the largest palm- and face-similarity measures:

$$\mathrm{TSM} = w_P \, S_P + w_F \, S_F$$

where wP and wF are the weighting factors associated with the palm and the face, respectively, with wP + wF = 1. The weighting factors were set experimentally, based on preliminary unimodal verification results obtained on the training database. The final decision to accept or reject a user is made by comparing the TSM with the verification threshold T: if TSM > T, the user is accepted; otherwise, the user is rejected.

Performance Evaluation

Evaluating the system requires a database containing both palm and face samples. The XM2VTS frontal-face-image database was used as the face database, and we collected the hand database ourselves using a scanner. The hand images have a spatial resolution of 180 dots per inch (dpi) and 256 grey levels. Because the hand and face databases contain samples from different people, a "chimerical" multimodal database was created for testing purposes by artificially pairing palm and face samples. Some examples are shown in Fig. 10.
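The construction of a "chimerical" database can be sketched as a one-to-one pairing of hand identities with face identities, after which the samples of each pair are zipped into (palm, face) pairs belonging to one virtual person. The source does not describe how identities were paired; random pairing with a fixed seed is an assumption, and the identifiers used here (such as `virtual_000`) are made up.

```python
import random


def build_chimerical_db(palm_samples, face_samples, seed=0):
    """Pair palm identities with face identities one-to-one.

    palm_samples, face_samples: dict identity -> list of samples.
    Returns dict virtual_id -> list of (palm_sample, face_sample) pairs."""
    palm_ids = sorted(palm_samples)
    face_ids = sorted(face_samples)
    random.Random(seed).shuffle(face_ids)  # reproducible random pairing
    db = {}
    # zip stops at the smaller number of identities ...
    for k, (pid, fid) in enumerate(zip(palm_ids, face_ids)):
        # ... and, per virtual person, at the smaller number of samples.
        db[f"virtual_{k:03d}"] = list(zip(palm_samples[pid], face_samples[fid]))
    return db


if __name__ == "__main__":
    palms = {f"hand{i}": [f"hand{i}_s{j}" for j in range(4)] for i in range(3)}
    faces = {f"face{i}": [f"face{i}_s{j}" for j in range(6)] for i in range(4)}
    for vid, pairs in build_chimerical_db(palms, faces).items():
        print(vid, pairs[0])
```

Each face identity is used at most once, so the virtual people stay as distinct from one another as the real people in the two source databases.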

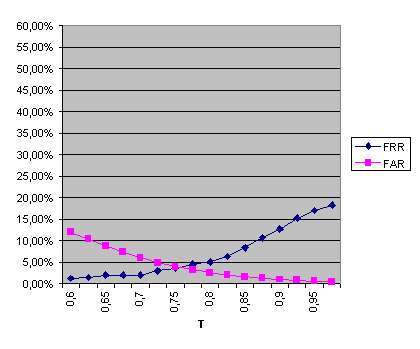

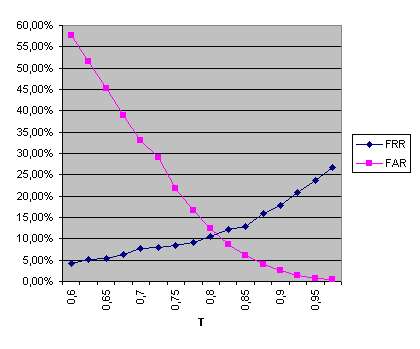

The database was divided into a training set and a testing set. The training set consisted of 440 image pairs from 110 people (4 image pairs per person), and the testing set of 1048 image pairs from 131 people (8 image pairs per person). The tests comprised 393 (131 × 3) valid-client experiments and 51,090 (131 × 3 × 130) impostor experiments. The results, expressed in terms of the FRR (false rejection rate) and FAR (false acceptance rate), depend on the selected verification threshold T. We first evaluated the unimodal performance of the system; the results are shown in Fig. 11a for the palmprint modality and Fig. 11b for the face modality.

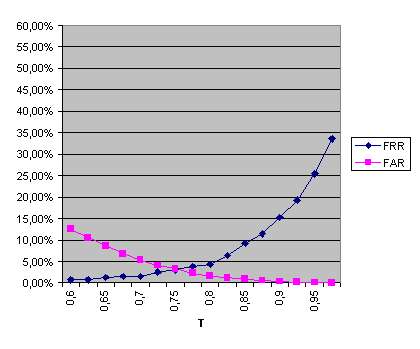

The palmprint modality yields an EER (equal error rate) of 3.82% at T = 0.755, while the face modality yields an EER of 10.87% at T = 0.81. The minimum TER (total error rate, FAR + FRR) is 7.66% at T = 0.8 for the palmprint features and 18.97% at T = 0.85 for the facial features. Combining both modalities through fusion at the matching-score level improves performance, as shown in Fig. 12.
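The error rates reported above can be computed from two score lists: the scores of the valid-client attempts (393 in these tests) and those of the impostor attempts (51,090). The sketch assumes the acceptance rule TSM > T used by the system, takes TER as FAR + FRR, sweeps a grid of thresholds, and reports the EER at the threshold where FAR and FRR are closest, which is one common convention. The toy scores in the example are made up.

```python
import numpy as np


def error_rates(genuine, impostor, thresholds):
    """FAR and FRR for each threshold, with the rule 'accept if score > T'."""
    g = np.asarray(genuine, dtype=float)[:, None]
    imp = np.asarray(impostor, dtype=float)[:, None]
    t = np.asarray(thresholds, dtype=float)[None, :]
    far = (imp > t).mean(axis=0)   # impostors accepted
    frr = (g <= t).mean(axis=0)    # valid clients rejected
    return far, frr


def summarize(genuine, impostor, thresholds):
    """EER and minimum TER (= FAR + FRR) over a grid of thresholds."""
    thresholds = np.asarray(thresholds, dtype=float)
    far, frr = error_rates(genuine, impostor, thresholds)
    ter = far + frr
    k_eer = int(np.argmin(np.abs(far - frr)))  # threshold where FAR is closest to FRR
    k_ter = int(np.argmin(ter))
    return {
        "eer": (far[k_eer] + frr[k_eer]) / 2, "t_eer": thresholds[k_eer],
        "min_ter": ter[k_ter], "t_min_ter": thresholds[k_ter],
    }


if __name__ == "__main__":
    genuine = [0.8, 0.9, 0.95, 0.7]
    impostor = [0.1, 0.2, 0.75, 0.3]
    print(summarize(genuine, impostor, np.linspace(0.0, 1.0, 101)))
```

Because FAR falls and FRR rises as T increases, the EER and minimum-TER thresholds generally differ, which is why the two are reported at different values of T above.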

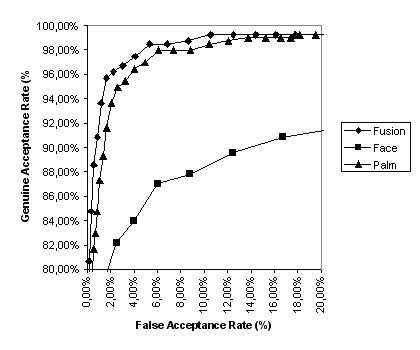

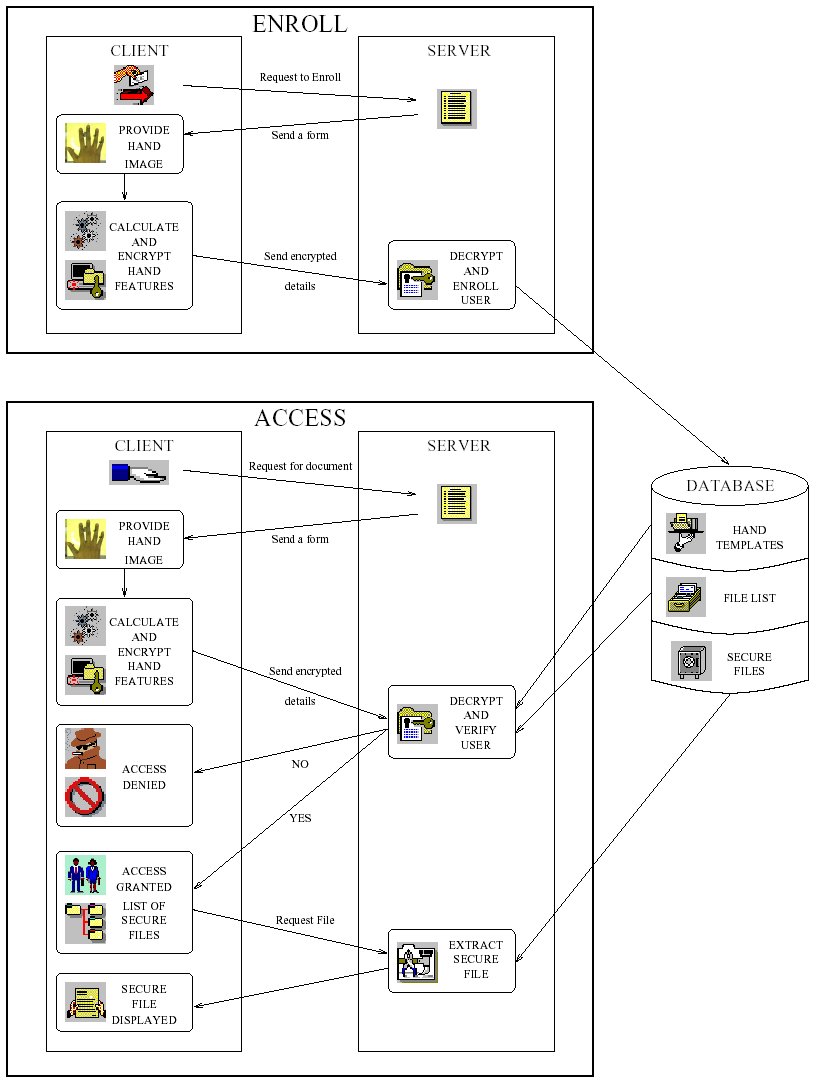

Fig. 12 shows that the fusion of palmprint and facial features improves verification performance, reducing the EER from 3.82% to 3.08% and the minimum TER from 7.66% to 5.94%. Fig. 12b compares all three systems (the two unimodal systems and the bimodal system).

Program modules for web-based enrollment and verification

Person authentication is the process of determining whether someone is, in fact, who they claim to be. Biometric personal authentication is based on the physiological and behavioural characteristics that distinguish one individual from another. The physiological characteristics most commonly used for automatic biometric authentication are fingerprint, hand geometry, palmprint, face, iris, retina, DNA and hand veins. The most common behavioural characteristics are speech, signature, gesture, gait and keystroke dynamics.

Two authentication methods are distinguished in biometrics: verification and identification. Verification is a one-to-one process that compares the biometric information presented by an individual (the live-template) with the biometric information stored in a database (the user's template). The user's template is selected from the database by means of the user's PIN or ID, so verification can be regarded as a combination of biometric features with an authentication mode based on what the user knows or possesses (PIN, password, ID). Identification is a one-to-many process that compares the live-template with all the users' templates stored in the database; it is based on biometric measurements alone. Both methods require the biometric enrollment of authorized users. During the enrollment phase, the system captures the chosen biometric features of the user, as well as other information about the user (name, ID number, etc.).
From the captured biometric characteristics, the relevant information is extracted and stored in the database as the user's template. In addition, an ID can be generated for the enrolled person, associated with the user's template and used in the verification procedure. Within this IT project, program modules for web-based enrollment and authentication have been developed. Fig. 13 illustrates the sequence of interactions between the client and server parts of the application.
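The difference between enrollment, one-to-one verification and one-to-many identification can be sketched with an in-memory template store. Everything here is hypothetical: templates are plain feature vectors, the `similarity` function is a stand-in for the fused palm/face score, and IDs are random hex strings. The actual application splits this logic between a client and a server and uses a secure transfer protocol, neither of which is shown.

```python
import uuid

import numpy as np


def similarity(a, b) -> float:
    """Stand-in matcher: 1 for identical templates, approaching 0 as they diverge."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return 1.0 / (1.0 + float(np.linalg.norm(diff)))


class TemplateStore:
    def __init__(self):
        self._users = {}  # user ID -> (name, template)

    def enroll(self, name, template) -> str:
        """Store the template and return a newly generated user ID."""
        user_id = uuid.uuid4().hex
        self._users[user_id] = (name, np.asarray(template, dtype=float))
        return user_id

    def verify(self, claimed_id, live_template, threshold) -> bool:
        """One-to-one: compare only with the template selected by the claimed ID."""
        if claimed_id not in self._users:
            return False
        _, stored = self._users[claimed_id]
        return similarity(live_template, stored) > threshold

    def identify(self, live_template, threshold):
        """One-to-many: compare with every template; return the best ID or None."""
        best_id, best_score = None, -1.0
        for user_id, (_, stored) in self._users.items():
            score = similarity(live_template, stored)
            if score > best_score:
                best_id, best_score = user_id, score
        return best_id if best_score > threshold else None
```

Verification touches a single stored template regardless of database size, while identification must score every enrolled user, which is one practical reason the system verifies a claimed ID or PIN rather than identifying users.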

Details of the implementation of the secure data-transfer protocol for this application can be found in the project documentation (in Croatian).

Conclusion and project summary

Within this IT project, we developed a prototype biometric system for verifying Internet users. The implemented system is based on the fusion of palmprint and face features. The evaluation results show that fusion at the matching-score level improves system performance. Combining palmprint and facial features also makes biometric authentication more robust against fraudulent attempts to access physical or logical resources. At the same time, the knowledge that a face image is being acquired has a psychological effect that reduces the likelihood of fraudulent attempts.

A demonstration of the implemented system can be tested off-line. The .exe program and a sample user database, together with some "intruder" images, can be downloaded here: (Setup program). The user's manual is available as (PDF).

The on-line demonstration of the implemented system requires a web camera, a scanner and an Internet connection. In addition, a client application has to be installed (Setup program). The user's manual is available as (PDF). If you experience problems with the installation or the application itself, you can contact ivan.fratric@fer.hr.

Documentation and papers


Copyright (c)

Department of Electronics, Microelectronics, Computer and Intelligent Systems

Zoran Kalafatić