Multi-domain semantic segmentation with overlapping labels

- we enable training and evaluation over incompatible taxonomies
- our method expresses each dataset class as a union of disjoint universal classes
- the resulting model won the semantic segmentation contest at the Robust Vision Challenge at ECCV 2020 and set a new state of the art on WildDash 2

[bevandic22wacv]
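
The union formulation from the second bullet can be illustrated with a minimal sketch. The universal taxonomy, the dataset mapping and all class names below are hypothetical and not taken from the paper. The sketch assumes that the probability of a dataset class is the sum of the softmax probabilities of its disjoint universal classes, and that the per-pixel loss is the negative logarithm of that sum.

```python
import math

# Hypothetical universal taxonomy (illustrative names, not from the paper).
UNIVERSAL = ["road", "sidewalk", "car", "van", "truck"]

# Hypothetical dataset taxonomy: each dataset class is a union of
# disjoint universal classes.
DATASET_A = {
    "ground": ["road", "sidewalk"],
    "vehicle": ["car", "van", "truck"],
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dataset_class_probs(universal_logits, mapping):
    """Sum universal-class probabilities over each dataset class."""
    probs = dict(zip(UNIVERSAL, softmax(universal_logits)))
    return {cls: sum(probs[u] for u in members)
            for cls, members in mapping.items()}


def nll(universal_logits, mapping, label):
    """Negative log-likelihood of a dataset-level label for one pixel."""
    return -math.log(dataset_class_probs(universal_logits, mapping)[label])


# Universal logits for a single pixel (made-up values).
pixel_logits = [2.0, 0.5, 1.0, -1.0, 0.0]
probs_a = dataset_class_probs(pixel_logits, DATASET_A)
loss = nll(pixel_logits, DATASET_A, "vehicle")
```

Because the universal classes are disjoint, the dataset-level probabilities still sum to one, so the same universal logits can be scored against any dataset whose classes map onto the universal taxonomy.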

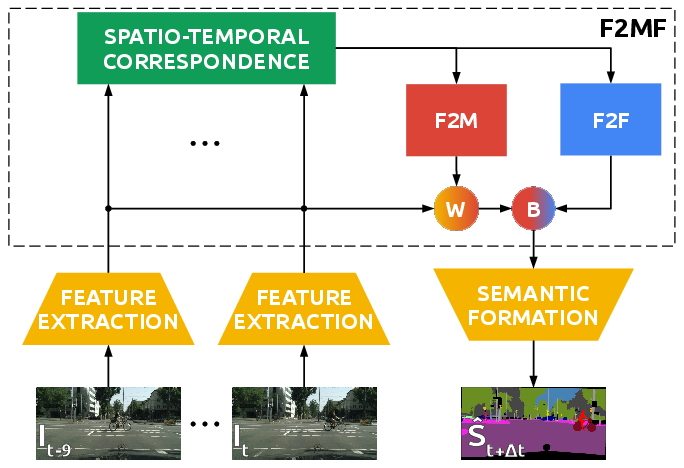

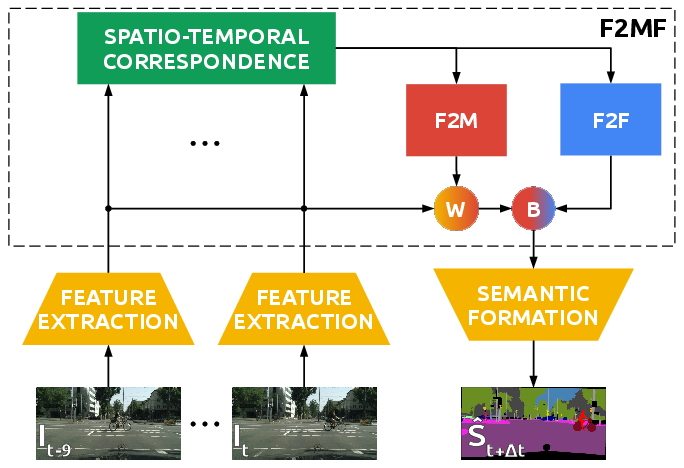

Dense Semantic Forecasting in Video by Joint Regression of Features and Feature Motion

- we express dense semantic forecasting as a causal relationship between the past and the future
- we complement convolutional features with their respective correlation coefficients across a small set of discrete displacements
- our single-frame model does not use skip connections along the upsampling path; hence we are able to forecast condensed abstract features at R/32
- we present experiments for three dense prediction tasks: semantic segmentation, instance segmentation and panoptic segmentation

[saric21tnnls]
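
The second bullet, correlation across discrete displacements, can be sketched as follows. This is only an illustration under stated assumptions: the correlation coefficient is taken to be the cosine similarity between a feature in the current frame and a displaced feature in the previous frame, out-of-bounds neighbours contribute zero, and the function names and toy feature maps are hypothetical.

```python
import math


def normalize(v, eps=1e-8):
    """Scale a feature vector to (approximately) unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v)) + eps
    return [x / norm for x in v]


def correlation(feat_cur, feat_prev, max_disp=1):
    """Correlate each current feature with displaced previous features.

    feat_cur, feat_prev: H x W grids of C-dimensional feature vectors.
    Returns an H x W grid holding (2 * max_disp + 1) ** 2 coefficients,
    one per displacement (dy, dx); out-of-bounds neighbours yield 0.
    """
    h, w = len(feat_cur), len(feat_cur[0])
    cur = [[normalize(v) for v in row] for row in feat_cur]
    prev = [[normalize(v) for v in row] for row in feat_prev]
    disps = [(dy, dx)
             for dy in range(-max_disp, max_disp + 1)
             for dx in range(-max_disp, max_disp + 1)]
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            coeffs = []
            for dy, dx in disps:
                yy, xx = y + dy, x + dx
                if 0 <= yy < h and 0 <= xx < w:
                    coeffs.append(
                        sum(a * b for a, b in zip(cur[y][x], prev[yy][xx])))
                else:
                    coeffs.append(0.0)
            row.append(coeffs)
        out.append(row)
    return out


# Toy 1 x 3 feature maps: the content of the previous frame moves one
# pixel to the right in the current frame.
feat_prev = [[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]]
feat_cur = [[[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]]
corr = correlation(feat_cur, feat_prev, max_disp=1)

# Complement the features with their correlation coefficients.
augmented = [[f + c for f, c in zip(f_row, c_row)]
             for f_row, c_row in zip(feat_cur, corr)]
```

In the toy example, the strongest coefficient at the middle pixel belongs to the displacement (0, -1), which matches the one-pixel shift to the right.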

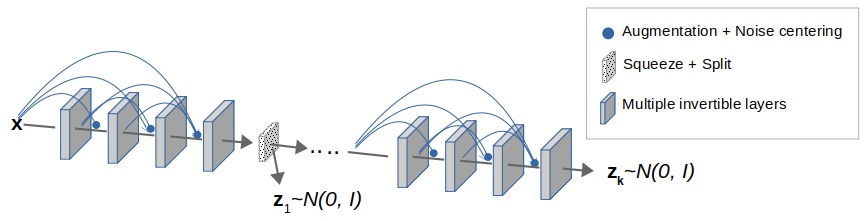

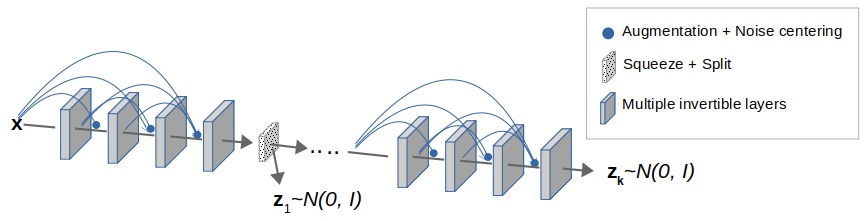

Densely connected normalizing flows

- we show how to apply dense connectivity to normalizing flows
- the resulting inductive bias assumes that some useful features are easier to compute than others
- however, straightforward skip connections preclude bijectivity
- hence, we concatenate noise after conditioning it on previous representations

[grcic21neurips]
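
The last bullet can be illustrated with a rough sketch of noise augmentation. It is not the paper's architecture: the conditioner that maps a previous representation to a mean and a scale is a hypothetical toy function, and the noise is assumed to be Gaussian. The sketch samples noise whose parameters depend on the previous representation, concatenates it to the current representation, and returns the log-density of the sample, which augmented flows typically subtract from the model log-likelihood to obtain a lower bound.

```python
import math
import random


def conditional_noise(prev_repr, noise_dim, rng):
    """Sample noise e ~ N(mu(h), sigma(h)^2) conditioned on h = prev_repr.

    mu and sigma come from a hypothetical toy conditioner (not the paper's
    network): mu is the mean of h, sigma is the softplus of its maximum.
    Returns the sample and its log-density log q(e | h).
    """
    mu = sum(prev_repr) / len(prev_repr)
    sigma = math.log1p(math.exp(max(prev_repr)))  # softplus keeps sigma > 0
    noise, log_q = [], 0.0
    for _ in range(noise_dim):
        eps = rng.gauss(0.0, 1.0)
        noise.append(mu + sigma * eps)
        # Log-density of a Gaussian sample, written in terms of eps.
        log_q += (-0.5 * eps * eps - math.log(sigma)
                  - 0.5 * math.log(2.0 * math.pi))
    return noise, log_q


def augment(current, prev_repr, noise_dim, rng):
    """Concatenate conditioned noise to the current representation.

    The current representation is passed through unchanged, so no
    information is lost; only the dimensionality grows.
    """
    noise, log_q = conditional_noise(prev_repr, noise_dim, rng)
    return current + noise, log_q


rng = random.Random(0)
z_aug, log_q = augment([0.1, -0.2], [0.5, 1.0, -0.3], noise_dim=3, rng=rng)
```

Previous representations influence the model only through the noise parameters, so later invertible layers can operate on the concatenated vector without a skip connection that would break bijectivity.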

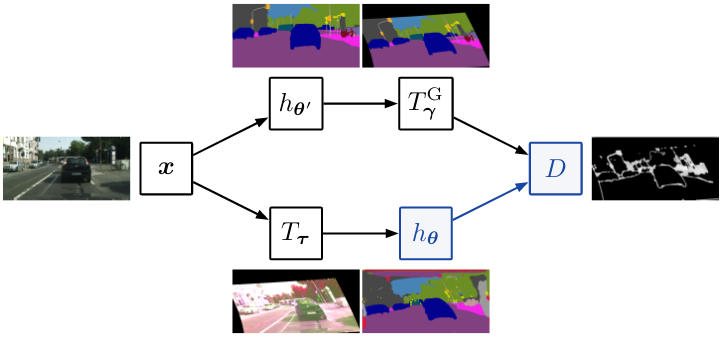

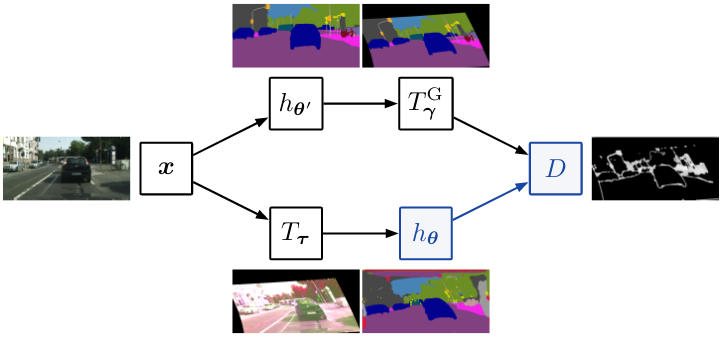

A baseline for semi-supervised learning of efficient semantic segmentation models

- we show that one-way consistency with a clean teacher outperforms other forms of consistency (e.g. clean student or two-way) in terms of both generalization performance and memory efficiency
- we propose a competitive perturbation model as a composition of a geometric warp and photometric jittering
- we observe that simple consistency scales better than Mean Teachers in the presence of more labels or more unlabeled data
- we experiment on efficient models due to their importance for real-time and low-power applications

[grubisic21mva]
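
One-way consistency with a clean teacher, as described in the first bullet, can be sketched as a divergence between the prediction on the clean input (teacher) and the prediction on the perturbed input (student). The toy model, the perturbation and the choice of KL divergence below are assumptions made for illustration. Plain Python has no automatic differentiation, so the stop-gradient on the teacher branch is only indicated in comments. With a geometric warp, the teacher prediction would also have to be warped to stay aligned with the student; that step is omitted here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * (math.log(pi + eps) - math.log(qi + eps))
               for pi, qi in zip(p, q))


def one_way_consistency_loss(model, x, perturb):
    """KL between the clean teacher and the perturbed student prediction."""
    # Teacher: clean input. In an autodiff framework this branch would be
    # wrapped in a stop-gradient and treated as a fixed target.
    teacher = softmax(model(x))
    # Student: perturbed input. Only this branch would receive gradients.
    student = softmax(model(perturb(x)))
    return kl_divergence(teacher, student)


def toy_model(x):
    """Hypothetical stand-in for a segmentation model at one pixel."""
    return [x[0] - x[1], x[1], 0.5 * x[0]]


def jitter(x):
    """Hypothetical photometric jitter: change contrast and brightness."""
    return [1.2 * v + 0.1 for v in x]


x = [1.0, 2.0]
loss = one_way_consistency_loss(toy_model, x, jitter)
loss_identity = one_way_consistency_loss(toy_model, x, lambda v: v)
```

Only the perturbed branch would need to keep activations for backpropagation, which is one plausible reading of the memory-efficiency claim above.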

[bevandic22wacv]

[saric21tnnls]

[grcic21neurips]

[grubisic21mva]